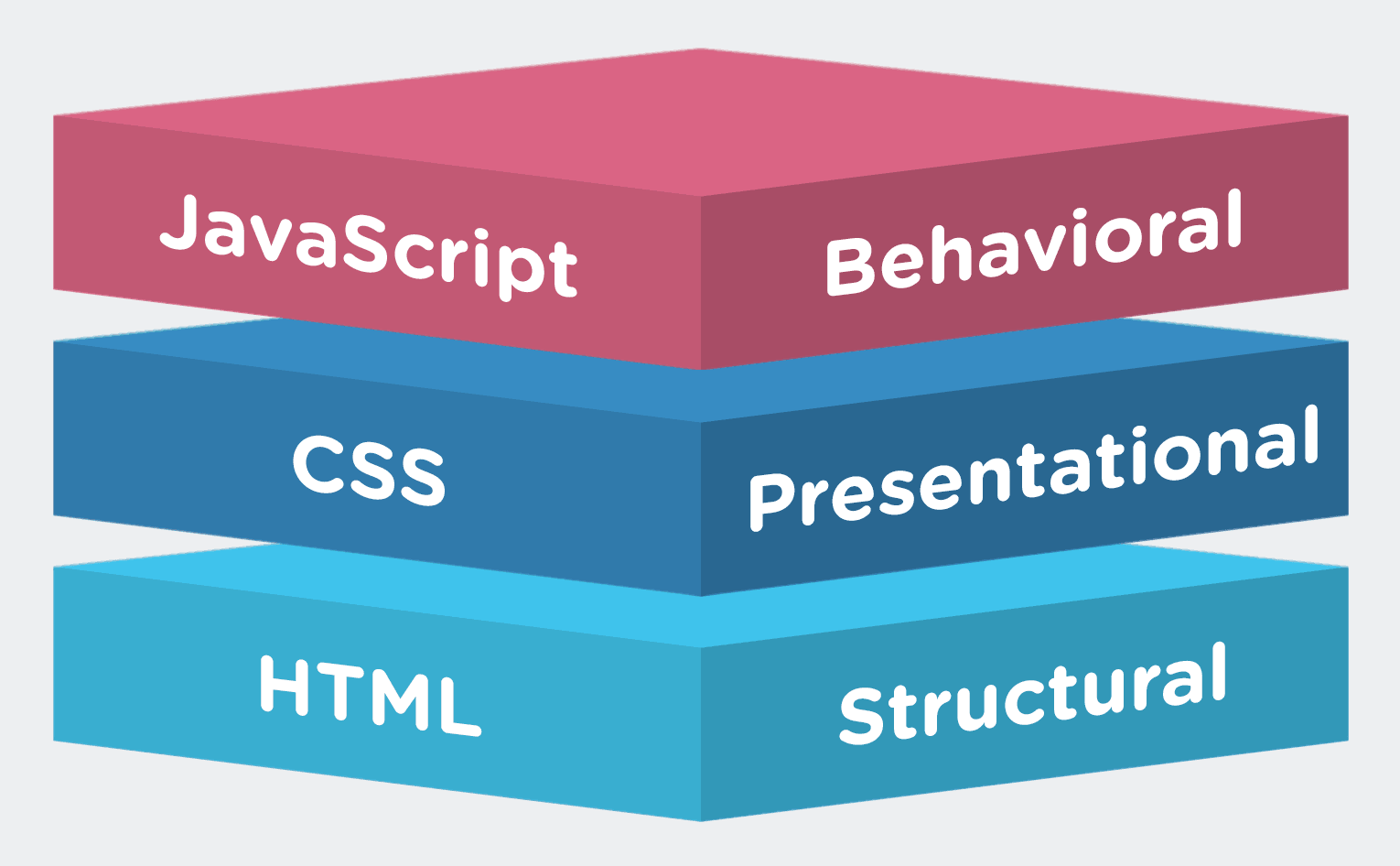

Progressive enhancement is a layered approach to building websites: HTML for content, CSS for presentation, and JavaScript for interactivity. If for some reason JavaScript breaks, the site should still work and look good. If the CSS doesn’t load correctly, the HTML content should still be there with meaningful hyperlinks. And even if the HTML is malformed, browsers are smart enough to keep rendering what they can. Progressive enhancement typically yields a result that is robust, fault tolerant, and accessible. People who have the latest devices will get the most enhanced experience, and people on slow connections or less capable devices will still be able to access the information. Websites don’t need to look the same in every browser; they just need to deliver core content and functionality.

If you’ve never heard of progressive enhancement before, this probably all sounds great. However, in the age of single-page JavaScript MVC frameworks (like Angular, Ember, Backbone, and more), this strategy for building websites has come into question. Should we abandon progressive enhancement and accept that JavaScript is now a requirement for websites? Or is progressive enhancement more important than ever?

Plenty of people have argued both sides of this issue. People like Tom Dale (one of the creators of Ember) think progressive enhancement is dead. Others, like Jake Archibald (a developer advocate at Google), believe progressive enhancement still matters. Whenever a long-standing idea comes into question, it’s worth examining the context in which that idea was born. Let’s look at where progressive enhancement started and where it is today, and then try to decide whether it’s still useful for the future.


The Past: Progressive Enhancement is Born

Progressive enhancement has been around since at least 2003. Back then, the web was still transitioning to using CSS instead of table-based layouts. JavaScript was an untamed beast, with exciting power but unpredictable behavior. Progressive enhancement provided an approach that allowed web professionals to deliver content everywhere and still take advantage of newer features where possible.

It was during this time that the idea of web “applications” became popular (although nothing actually changed; they’re still just websites). The demand for dynamic pages connected to databases couldn’t be met with JavaScript alone, so server-side application programming became paramount. In a framework like Ruby on Rails, HTML is constructed on the server and then delivered to the client. When you visit a friend’s profile on a social network, for example, the server uses a “profile” template and fills in the blanks (like the person’s name, photo, and so forth). Even with the advent of AJAX towards the middle of the decade, server-side code still dutifully took requests and delivered the necessary data.
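For readers who haven’t worked with server-side templates, here is a minimal sketch of the “fill in the blanks” idea. It is written in TypeScript rather than Ruby on Rails, and the `Profile` shape, the `escapeHtml` and `renderProfile` helpers, and the markup are all hypothetical; a real framework would also handle routing, database queries, and sending the HTTP response.

```typescript
// Minimal sketch of server-side templating (all names are hypothetical):
// the server fills in a "profile" template and sends finished HTML to the client.

interface Profile {
  name: string;
  photoUrl: string;
}

// Escape characters that are special in HTML so user-supplied data
// cannot inject markup into the page.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// The "profile" template: plain HTML with blanks for the person's details.
function renderProfile(profile: Profile): string {
  const name = escapeHtml(profile.name);
  const photo = escapeHtml(profile.photoUrl);
  return [
    `<article class="profile">`,
    `  <h1>${name}</h1>`,
    `  <img src="${photo}" alt="Photo of ${name}">`,
    `</article>`,
  ].join("\n");
}

// On each request, the server would look up the profile and send this string.
console.log(renderProfile({ name: "Ada", photoUrl: "/photos/ada.jpg" }));
```

Because the browser receives ordinary HTML, the page is usable before (and without) any client-side JavaScript, and enhancements can be layered on afterwards.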

The Present: Browsers Become Application Runtimes

Rendering HTML on the server is still hugely popular, but ever since AJAX, browsers have become increasingly capable of running JavaScript. In fact, they are so capable that MVC JavaScript frameworks like Angular, Ember, and Backbone can now generate HTML directly in the browser. At the extreme end of the spectrum, some web pages are written entirely in JavaScript. These frameworks are gaining popularity quickly enough that many job postings now expect experience with them.

But wait a second… If people are generating HTML with JavaScript running in the browser, doesn’t that leave progressive enhancement in the dust? And even if it does, nearly everyone has JavaScript in their browser, so what’s the worry?

Personally, I’m not worried about users disabling JavaScript (it’s not even an option in some browsers now). I’m concerned that progressive enhancement is being abandoned recklessly without first examining the potential consequences. There are special exceptions where there is no sensible fallback, as in the case of action games written in JavaScript (unless you’re building a text-based adventure). However, if your site is intended to help people manipulate data in some way, think about what that might look like in HTML before immediately diving into pure JavaScript. It might be perfectly feasible to produce well-structured pages with stylish interactive enhancements layered on top.

HTML and CSS are both declarative languages. Sometimes people don’t like them because they lack features like variables, control structures, objects, reusable templates, and more. However, their simplicity is their genius. Sir Tim Berners-Lee (creator of the World Wide Web) wrote about this simplicity and called it the principle of least power. Even with the most optimized and well-tested code (which doesn’t always happen), complicated programs written in JavaScript have far more that can fail. HTML and CSS simply have less that can go wrong, so if the JavaScript does break, there’s still a working solution in place. Christian Heilmann used a hilarious Mitch Hedberg quote to draw an analogy: when an escalator breaks, it becomes stairs. Websites should be able to partially break and still allow you to get things done.

I’m not saying don’t use JavaScript; I’m saying use JavaScript when it makes sense. Every language can be abused: think of HTML tables used for layout, or CSS 3D transforms that turn non-semantic DOM elements into geometry. Just because we can do something doesn’t mean we should. If there’s no alternative, then so be it, but in many cases there is a sensible layered solution.

The Future: The Great Unknown

There are different browsers, operating systems, and hardware. There are people who use assistive devices to access web content. There are software robots that crawl the web in search of information. There are fast connections and slow connections, smart watches, smart glasses, smart cars, voice-activated digital assistants, people with tablets and people with feature phones. How can we deliver content in such a diversely connected world?

Some time ago, the BBC published a wonderful article called “cutting the mustard.” In it, they describe how they used feature detection (not user-agent detection) to determine whether the “enhanced” news experience should be loaded on top of the core experience. In other words, progressive enhancement isn’t just about HTML, CSS, and JavaScript. It can also apply to individual features that your application needs to produce the awesome-er version. If something like WebGL isn’t available for a cool product visualization, or users are on a slow connection where lots of JavaScript and texture maps would kill page load times, then falling back to a nice-looking image is perfectly acceptable. Only if a cutting-edge feature is detected should you pour on the future-sauce.
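To make the idea concrete, here is a small TypeScript sketch of feature detection. The three checks are similar to the test described in the BBC article (`querySelector`, `localStorage`, `addEventListener`), but treat the exact list, the `Host` wrapper, and the function names as illustrative assumptions. The browser globals are passed in as parameters so the logic can also run outside a browser.

```typescript
// Sketch of "cutting the mustard": detect features, not user agents.
// The specific checks and names below are illustrative assumptions.

// The global objects to inspect are injected. In a page you would pass
// { doc: document, win: window }.
interface Host {
  doc: object;
  win: object;
}

// Core test: does this browser have the APIs the enhanced layer relies on?
function cutsTheMustard(host: Host): boolean {
  return (
    "querySelector" in host.doc &&
    "localStorage" in host.win &&
    "addEventListener" in host.win
  );
}

// The core HTML experience is always delivered; only the enhancement is optional.
function chooseExperience(host: Host): "core" | "enhanced" {
  return cutsTheMustard(host) ? "enhanced" : "core";
}

// Feature-level check for a single capability such as WebGL. The canvas
// factory is injected; in a page it could be () => document.createElement("canvas").
// getContext returns null when the requested context type is unavailable.
function supportsWebGL(
  makeCanvas: () => { getContext(contextId: string): unknown } | null
): boolean {
  try {
    const canvas = makeCanvas();
    return canvas !== null && canvas.getContext("webgl") !== null;
  } catch {
    // Any failure while probing counts as "not supported".
    return false;
  }
}
```

In a page, the result of `chooseExperience` would decide whether to load the extra script at all, and `supportsWebGL` would decide between the 3D visualization and the static image; browsers that fail either test simply keep the core layer. Injecting the globals is a choice made here for testability, not part of the BBC’s approach.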

Innovation is exploding in every direction imaginable, and the bottom line is this: we just don’t know for sure what the future looks like, so it’s best to work with web standards instead of fighting against them. We’ve been down this rocky road before, when people were building Flash websites instead of adhering to web standards. Then Apple started releasing popular iOS devices that didn’t run Flash, and a lot of sites broke. It’s happened before, and it will happen again. Maybe it will be a huge shift to virtual reality, or maybe there will be an audio-only AI that doesn’t show any visuals, or maybe we’ll barely fight off invading space aliens that blind us all with their ray guns; who the heck knows.

Pure JavaScript websites aren’t all bad. They’re very powerful and capable of creating amazing interactive experiences. If you understand the potential consequences and there are no great alternatives, then I say go nuts. However, if you can build a progressively enhanced website, you really should. Everyone deserves access to the sum of all human knowledge. The web is not for anyone; it’s for everyone.

I arrived at many of the same conclusions that you posted here. Progressive enhancement should be a driver and not a nuisance.

http://ponyfoo.com/articles/stop-breaking-the-web

I also wrote an MVC engine that puts progressive enhancement first.

https://github.com/taunus/taunus

After all those great technologies emerged (HTML5, CSS3…), web development started to make sense, and code became cleaner.

But now the dark ages of the web are back. Soon we will all be using tables and other unsemantic HTML for design again… Who knows, maybe even VBScript will somehow be resurrected?

I like your thoughts, but I don’t think it’s fair to compare JavaScript-rendered sites with Flash-based sites. JavaScript IS part of the web spec.

For me, outdated browsers are a far bigger pain than JS doing something unexpected, so tools like html5shiv are essential. It almost seems like progressive enhancement has been turned upside down: we now NEED JavaScript to support corner cases.

I really dislike it when people do something without even knowing the basics of how it works.

This is why I enjoyed your article so much 🙂

Thank you, Nick, for the comprehensive story of this particular web development paradigm. It’s good to know what you are doing, but it’s even better to know WHY you are doing it. Just so you know, I would love to hear more about web development paradigms and your personal experiences with them. Learn from the best, they say. =)